Filtering Text Using LLMs

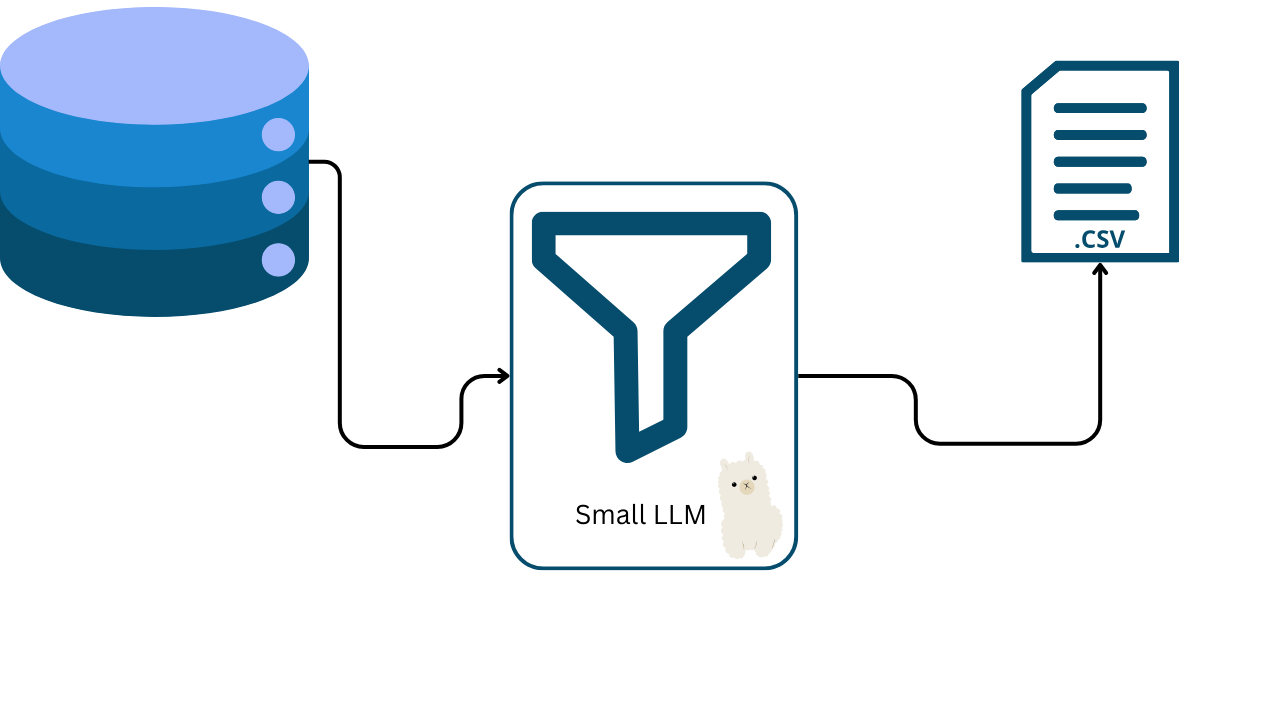

In the ever-evolving landscape of natural language processing (NLP), efficient data preparation is crucial for building high-performing language models , filtering text using large language models (LLMs) is a powerful approach to ensure that your dataset is both relevant and clean, making it suitable for pre-training and fine-tuning sophisticated models.

This guide delves into how LLMs can be leveraged for text filtering, specifically focusing on the benefits of using the Phi-3 model, which is optimized for tasks requiring deep contextual understanding and handling large amounts of text.

Why Use LLMs for Text Filtering?

Large language models, like Phi-3, are designed to understand and generate human-like text based on extensive training on diverse datasets. When it comes to filtering text LLMs offer several advantages:

Contextual Understanding: LLMs can interpret the nuances and context of the text more accurately than traditional keyword-based methods, this helps in identifying and classifying content based on subtle and complex criteria.

Flexibility: LLMs can be adapted to various filtering tasks, such as detecting sensitive information, categorizing content into different themes, and ensuring compliance with predefined standards.

Scalability: With the ability to process large volumes of text, LLMs can handle extensive datasets efficiently, making them ideal for filtering tasks that require high throughput.

Consistency: By using a model trained on diverse datasets, you achieve more consistent and reliable filtering results compared to rule-based systems.

Why Choose the Phi-3 Model?

The Phi-3 model, particularly the microsoft/Phi-3-mini-128k-instruct variant, is well-suited for text filtering due to its unique features:

Size and Efficiency: The Phi-3 model is compact yet powerful, offering a good balance between performance and computational resource requirements. This makes it suitable for a variety of filtering tasks without excessive overhead.

Large Context Window (128k Tokens): One of the standout features of Phi-3 is its large context window, which allows the model to process and understand up to 128k tokens in a single pass. This capability is essential for handling long documents and maintaining coherence across extensive text, enhancing the accuracy of filtering and classification tasks.

Contextual Understanding: Phi-3 excels in understanding the context of text due to its advanced training and architecture. This deep contextual comprehension is crucial for filtering text with nuanced criteria, such as identifying sensitive information or categorizing content based on complex themes.

Our Goal

The primary goal of filtering text using LLMs is to prepare high-quality datasets for subsequent pre-training and fine-tuning of language models. By ensuring that the data is clean, relevant, and properly categorized, you lay a solid foundation for training models that perform well on a wide range of NLP tasks.

Properly filtered data improves the efficiency of the training process and enhances the model's ability to generate accurate and contextually appropriate responses.

Code Example

Login to Hugging Face

This command logs you into your Hugging Face account via the command line. It uses the --token flag to provide your authentication token, allowing access to download and use models from Hugging Face’s hub.

Make sure to replace the token with your actual one to ensure proper access.

! huggingface-cli login --token XXXXXXXXXXXXXXXXXXXXXXXxxxxxxxxx

Importing Libraries

We import the necessary libraries to perform the text filtering task.

from transformers import pipeline

from datasets import load_dataset

from tqdm import tqdm

import pandas as pd

import re

import json

Downloading the Phi-3 model

We download the Phi-3 model from Hugging Face.

pipe = pipeline("text-generation",

model="microsoft/Phi-3-mini-128k-instruct",

trust_remote_code=True)

Define the system prompt and user prompt

In the first part, the system_prompt sets the context by describing the model as a tool for filtering and categorizing text based on specific topics.

The user_prompt, on the other hand, outlines the task for the model: to analyze the provided text and classify it according to the topics of Safety, Privacy, Harassment, Hate Speech, and Misinformation , the results should be formatted in json, with each entry including the original text and its classification for each topic, specifying Yes or No for relevance.

system_prompt = """

You are a text classification model with the ability to filter and categorize texts based on specified topics.

"""

user_prompt = """

Please process the provided text and filter it according to the following topics:

1. Safety

2. Privacy

3. Harassment

4. Hate Speech

5. Misinformation

For each text, determine whether it is relevant to these topics and categorize it accordingly. Return the results in JSON format, where each entry includes the original text and its classification for each topic.

**Format:**

```json

[

{

"classification": {

"Safety": "Yes/No",

"Privacy": "Yes/No",

"Harassment": "Yes/No",

"Hate Speech": "Yes/No",

"Misinformation": "Yes/No"

}

},

]

```

"""

Define the filter run function

First it sets up a list of messages that includes a system prompt to define the model's role, a user prompt detailing the filtering task, and the actual text to be classified.

After that it specifies generation_args for generating text including parameters such as max_new_tokens to control the length of the output, temperature for controlling randomness .

def run_filter(text) :

messages = [

{"role": "system", "content": system_prompt},

{"role": "user", "content": user_prompt},

{"role": "user", "content": f"My text : {text}" }

]

generation_args = {

"max_new_tokens": 2048,

"return_full_text": True,

"temperature": 0.0,

"do_sample": False

}

output = pipe(messages, **generation_args)

return output[0]['generated_text'][3]['content']

Next the function uses the pipe to process the messages with these arguments and returns the relevant part of the generated output as a json-formatted text classification result.

Define The json extract function

The extract_json_from_string function takes a string as input and returns a list of json objects and any errors that occurred during the parsing.

def extract_json_from_string(text):

objs = []

errors = []

# Use regex to find JSON-like structures in the text

matches = re.findall(r'{.*}', text, re.DOTALL)

if not matches:

errors.append("No JSON patterns found")

for index, json_string in enumerate(matches):

try:

# Load JSON object

json_obj = json.loads(json_string)

objs.append(json_obj)

except json.JSONDecodeError as e:

errors.append((index, json_string, f"JSONDecodeError: {e}"))

except Exception as e:

errors.append((index, json_string, f"Error: {e}"))

return objs, errors

Define the dataset filter function

First it loads the dataset specified by ds_name and split, then retrieves the target column containing the texts.

The function starts by initializing an empty list, df_dict, to store the results , it then processes each text by applying the run_filter function to obtain classification results and uses extract_json_from_string to parse these results , if errors occur during parsing the error messages are appended to the errors list and each processed text along with its classification and any errors is added to df_dict.

def dataset_filter(ds_name , split , target_column , save=False) :

ds = load_dataset(ds_name , split=split)

text_list = ds[target_column]

df_dict = []

# Process each text in the list

for i in tqdm(text_list, desc="Processing texts"):

result = run_filter(i)

objs, errors = extract_json_from_string(result)

# Handle cases where objs or errors might be empty

classification = objs[0]['classification'] if objs else None

error_info = errors[0] if errors else None

# Append results to the list

df_dict.append({

"text": i,

"classification": classification,

"errors": error_info

})

df = pd.DataFrame(df_dict)

classification_df = pd.json_normalize(df['classification'])

final_df = df.drop(columns=['classification']).join(classification_df)

if save :

final_df.to_csv(f"{ds_name.rsplit('/')[-1]}-{target_column}-Filtered.csv", index=False)

return final_df

Once all texts are processed, the function converts the list into a DataFrame and normalizes the classification data into separate columns.

If save is True it saves the final DataFrame to a csv file named according to the dataset and column names , the function in the end returns the DataFrame with the filtered and classified texts.

Usage Example

new_df = dataset_filter(

ds_name="ayoubkirouane/Small-Instruct-Alpaca_Format",

split="train",

target_column="response",

save=True

)

ds_name: specifies the dataset"ayoubkirouane/Small-Instruct-Alpaca_Format".split: indicates that the"train"split of the dataset should be used.target_column: targets the"response"column for filtering.save: set toTrueto save the filtered results to a csv file.

The output of new_df will contain the filtered and classified data and a csv file with the filtered results will be saved in the working directory.