Accelerating Large Language Models with TensorRT-LLM

In our previous discussion, we explored the capabilities of NVIDIA TensorRT for accelerating deep learning inference. Today, we extend that exploration to TensorRT-LLM, a specialized open-source library designed to optimize and accelerate Large Language Model (LLM) inference on NVIDIA GPUs.

TensorRT-LLM builds on TensorRT’s performance advantages to enhance LLM deployments, enabling powerful models like Meta’s Llama and Mistral to run efficiently not only in data centers but also on Windows PCs with RTX GPUs. This library offers up to 8x faster inference performance through advanced techniques such as in-flight batching and tensor parallelism, making it possible to run cutting-edge AI applications locally.

By leveraging TensorRT-LLM, developers can reduce their reliance on cloud infrastructure, resulting in cost savings and improved data privacy, all while achieving top-notch performance. With upcoming updates poised to offer even greater integration with popular models and tools, TensorRT-LLM is set to make high-performance LLM capabilities accessible across a range of platforms.

Code Time

Installing TensorRT-LLM and Testing the Installation

Here we first update our system packages and install essential dependencies like Python 3.10, pip, OpenMPI, Git, and wget. We then install TensorRT-LLM (version 0.8.0) via pip, sourcing it from NVIDIA's PyPI repository to ensure compatibility with Tensor Core GPUs.

apt-get update && apt-get -y install python3.10 python3-pip openmpi-bin libopenmpi-dev git git-lfs wget

pip3 install tensorrt_llm==0.8.0 -U --extra-index-url https://pypi.nvidia.com

After installing TensorRT-LLM, we quickly verify the setup by importing the library. If the installation is successful, the output should confirm the version of TensorRT-LLM as 0.8.0.

python3 -c "import tensorrt_llm"

We should get : [TensorRT-LLM] TensorRT-LLM version: 0.8.0

Installing Requirements and Cloning a Model Example

Next, we clone the TensorRT-LLM repository at version 0.8.0 and navigate to the example directory for a supported model, such as GPT-2. Although GPT-2 is used here as an example, TensorRT-LLM supports various models, and you can substitute this with any other supported model of your choice. We then install the necessary dependencies from the requirements.txt file, preparing the environment for working with the selected model in TensorRT-LLM.

git clone --branch v0.8.0 https://github.com/NVIDIA/TensorRT-LLM.git

cd TensorRT-LLM/examples/gpt

pip install -r requirements.txt

Here we clone the GPT-2 model repository from Hugging Face directly into the TensorRT-LLM example directory. We then clean up unnecessary files, keeping only the pytorch_model.bin file (if available), which is needed for conversion to the TensorRT-LLM format.

cd TensorRT-LLM/examples/gpt/

rm -rf gpt2 && git clone https://huggingface.co/gpt2 gpt2

cd gpt2

rm model.safetensors

cd ..

Handling Missing .bin Models

In cases where the GPT-2 model repository lacks a pytorch_model.bin, we use a script to generate it. The script loads the model and tokenizer using the Transformers library and saves the model state in .bin format. It also removes .safetensors files if present, ensuring compatibility with the conversion process.

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

import os

import glob

def save_model_as_bin(model_name, save_directory):

# Load the model and tokenizer

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

# Create save directory if it doesn't exist

os.makedirs(save_directory, exist_ok=True)

# Save the model and tokenizer

model.save_pretrained(save_directory)

tokenizer.save_pretrained(save_directory)

# Save the model in .bin format

torch.save(model.state_dict(), os.path.join(save_directory, "pytorch_model.bin"))

# Remove .safetensors files if they exist

for safetensors_file in glob.glob(os.path.join(save_directory, "*.safetensors")):

os.remove(safetensors_file)

# Usage

save_model_as_bin("model_id", "TensorRT-LLM/examples/....")

Converting Weights to TensorRT-LLM Format

The conversion step transforms the Hugging Face model weights into the format required by TensorRT-LLM. This involves specifying the input directory, output path, tensor parallelism (1 in this case), and using float16 for storage, optimizing inference performance.

python3 TensorRT-LLM/examples/gpt/hf_gpt_convert.py -i TensorRT-LLM/examples/gpt/gpt2 -o ./c-model/gpt2 --tensor-parallelism 1 --storage-type float16

Building the TensorRT Engine

Now, we build the TensorRT engine, which is a highly optimized representation of the model ready for fast inference. This script uses the converted model files and specifies options like using the GPT attention plugin and removing input padding, further enhancing performance.

python3 TensorRT-LLM/examples/gpt/build.py --model_dir=./c-model/gpt2/1-gpu --use_gpt_attention_plugin --remove_input_padding

Inference Using Llama-index

We can use the Llama-index library to perform inference with the optimized TensorRT engine.

pip install llama-index llama-index-llms-nvidia-tensorrt

After installing the necessary Llama-index packages, the code instantiates a LocalTensorRTLLM object, providing the paths to the engine, tokenizer, and other parameters.

from llama_index.llms.nvidia_tensorrt import LocalTensorRTLLM

llm = LocalTensorRTLLM(

model_path="./engine_outputs",

engine_name="gpt_float16_tp1_rank0.engine",

tokenizer_dir="gpt2",

max_new_tokens=15,

)

It then demonstrates a simple completion task, prompting the model with "once upon a time" and printing the generated text...

resp = llm.complete("once upon a time")

print(str(resp))

Deploy with Triton Inference Server

Here, we start by cloning the TensorRT-LLM backend repository specific to version 0.9.0 and copying the model files from the c-model/gpt2/1-gpu directory into the Triton backend model directory.

git clone -b v0.9.0 https://github.com/triton-inference-server/tensorrtllm_backend.git

cp c-model/gpt2/1-gpu/* tensorrtllm_backend/all_models/inflight_batcher_llm/tensorrt_llm/1

This prepares the backend with the necessary model files for deployment.

Update some configuration

Next, we configure TensorRT-LLM to support in-flight batching by creating configuration files for different components. This includes filling in templates for preprocessing, postprocessing, and the main model configuration using the fill_template.py script. In-flight batching enables the processing of requests together to improve throughput and reduce latency.

HF_LLAMA_MODEL=/content/TensorRT-LLM/examples/gpt/gpt2

ENGINE_PATH=tensorrtllm_backend/all_models/inflight_batcher_llm/tensorrt_llm/1

python3 tensorrtllm_backend/tools/fill_template.py -i tensorrtllm_backend/all_models/inflight_batcher_llm/preprocessing/config.pbtxt \

tokenizer_dir:${HF_LLAMA_MODEL},tokenizer_type:auto,triton_max_batch_size:64,preprocessing_instance_count:1

python3 tensorrtllm_backend/tools/fill_template.py -i tensorrtllm_backend/all_models/inflight_batcher_llm/postprocessing/config.pbtxt \

tokenizer_dir:${HF_LLAMA_MODEL},tokenizer_type:auto,triton_max_batch_size:64,postprocessing_instance_count:1

python3 tensorrtllm_backend/tools/fill_template.py -i tensorrtllm_backend/all_models/inflight_batcher_llm/tensorrt_llm_bls/config.pbtxt \

triton_max_batch_size:64,decoupled_mode:True,bls_instance_count:1,accumulate_tokens:False

python3 tensorrtllm_backend/tools/fill_template.py -i tensorrtllm_backend/all_models/inflight_batcher_llm/ensemble/config.pbtxt \

triton_max_batch_size:64

python3 tensorrtllm_backend/tools/fill_template.py -i tensorrtllm_backend/all_models/inflight_batcher_llm/tensorrt_llm/config.pbtxt \

triton_max_batch_size:64,decoupled_mode:True,max_beam_width:1,engine_dir:${ENGINE_PATH},max_tokens_in_paged_kv_cache:2560,max_attention_window_size:2560,kv_cache_free_gpu_mem_fraction:0.5,exclude_input_in_output:True,enable_kv_cache_reuse:False,batching_strategy:inflight_fused_batching,max_queue_delay_microseconds:0

Configuration settings such as triton_max_batch_size, decoupled_mode (For streaming), and batching_strategy are specified to optimize performance.

Serving the Model

To serve the model, we run the Triton Inference Server within a Docker container. The docker run command sets up the server with GPU support, mounts the current directory to the container, and specifies the working directory.

docker run -it --rm --gpus all --network host --shm-size=1g -v $(pwd):/tensorrtllm_backend --workdir /tensorrtllm_backend nvcr.io/nvidia/tritonserver:24.03-trtllm-python-py3

We can launch the Triton server using a provided Python script, setting the model repository path and world size to configure the server for model inference.

python3 tensorrtllm_backend/scripts/launch_triton_server.py \

--model_repo tensorrtllm_backend/all_models/inflight_batcher_llm \

--world_size 1

Testing the Deployment

Here we test the deployed model by sending a request to the Triton server's API endpoint. The Python script constructs a payload with input text and parameters, sends a POST request to the model endpoint, and prints the response.

import requests

url = "http://localhost:8000/v2/models/ensemble/generate"

payload = {

"text_input": "Say this is a test.",

"parameters": {

"max_tokens": 128,

"stop_words": [""]

}

}

response = requests.post(url, json=payload)

if response.status_code == 200:

print("Response:", response.json())

else:

print(f"Error {response.status_code}: {response.text}")

This verifies that the model is correctly served and can generate responses as expected.

Streaming Test :

import requests

url = "http://localhost:8000/v2/models/ensemble/generate_stream"

payload = {

"text_input": "Tell me a short joke about llamas",

"parameters": {

"max_tokens": 128,

"stop_words": [""],

"stream": True

}

}

# Send a POST request with streaming enabled

response = requests.post(url, json=payload, stream=True)

if response.status_code == 200:

# Process the streaming response

for line in response.iter_lines():

if line:

# Print or process each line of the stream

print(line.decode('utf-8'))

else:

print(f"Error {response.status_code}: {response.text}")

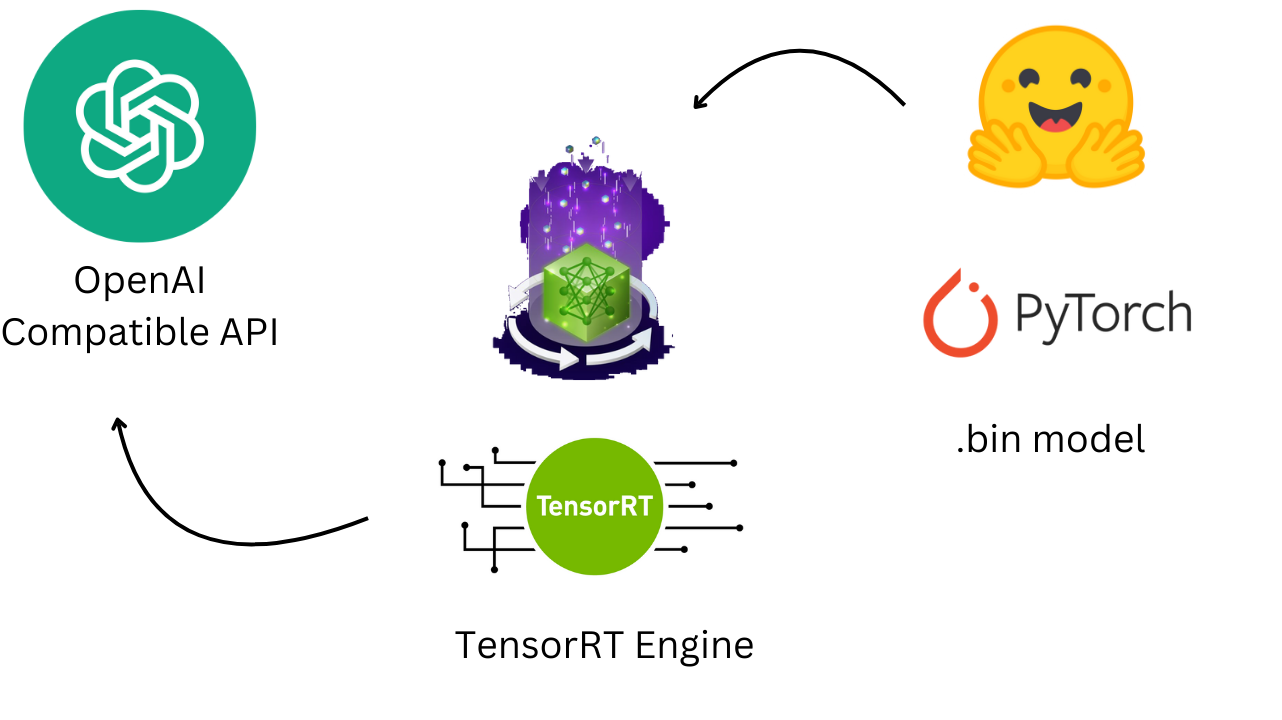

Setting an OpenAI-compatible API

To integrate TensorRT-LLM with OpenAI-compatible APIs, we first clone the openai_trtllm repository, which provides a bridge between OpenAI’s API format and the Triton Inference Server.

git clone --recursive https://github.com/npuichigo/openai_trtllm.git

Ensure Rust is installed, then build the project using Cargo.

cargo run --release

This generates the necessary binary files for running the API server.

Running the OpenAI-Compatible API

After building the source code, we run the openai_trtllm binary to start the API server. We configure the server with options such as host, port, Triton endpoint...

./target/release/openai_trtllm --help

Usage: openai_trtllm [OPTIONS]

Options:

-H, --host <HOST>

Host to bind to [default: 0.0.0.0]

-p, --port <PORT>

Port to bind to [default: 3000]

-t, --triton-endpoint <TRITON_ENDPOINT>

Triton gRPC endpoint [default: http://localhost:8001]

-o, --otlp-endpoint <OTLP_ENDPOINT>

Endpoint of OpenTelemetry collector

--history-template <HISTORY_TEMPLATE>

Template for converting OpenAI message history to prompt

--history-template-file <HISTORY_TEMPLATE_FILE>

File containing the history template string

--api-key <API_KEY>

Api Key to access the server

-h, --help

Print help

The openai_trtllm --help command displays available options for customizing the server's behavior.

Testing the OpenAI-Compatible API

./target/release/openai_trtllm

Openai Client :

import pprint

from openai import OpenAI

client = OpenAI(base_url="http://localhost:3000/v1", api_key="test")

result = client.completions.create(

model="ensemble",

prompt="Say this is a test",

)

pprint.pprint(result)

Streaming Test :

from sys import stdout

from openai import OpenAI

client = OpenAI(base_url="http://localhost:3000/v1", api_key="test")

response = client.completions.create(

model="ensemble",

prompt="This is a story of a hero who went",

stream=True,

max_tokens=50,

)

for event in response:

if not isinstance(event, dict):

event = event.model_dump()

event_text = event["choices"][0]["text"]

stdout.write(event_text)

stdout.flush()

Langchain ChatOpenAI :

from langchain.chat_models import ChatOpenAI

from langchain.schema.messages import HumanMessage, SystemMessage

chat = ChatOpenAI(openai_api_base="http://localhost:3000/v1",

openai_api_key="test", model_name="ensemble",

max_tokens=100)

messages = [

SystemMessage(content="You're a helpful assistant"),

HumanMessage(content="What is the purpose of model regularization?"),

]

result = chat.invoke(messages)

print(result.content)

Deploying TensorRT-LLM with Triton Inference Server and setting up an OpenAI-compatible API enables efficient, high-performance inference for large language models. By leveraging in-flight batching and optimizing model configurations, you can achieve significant improvements in throughput and latency. The integration with Triton Inference Server allows for seamless model serving, while the OpenAI-compatible API facilitates easy integration with existing applications. This setup not only enhances performance but also provides flexibility in deploying and interacting with advanced AI models locally or in production environments.