Optimizing Models with TensorRT

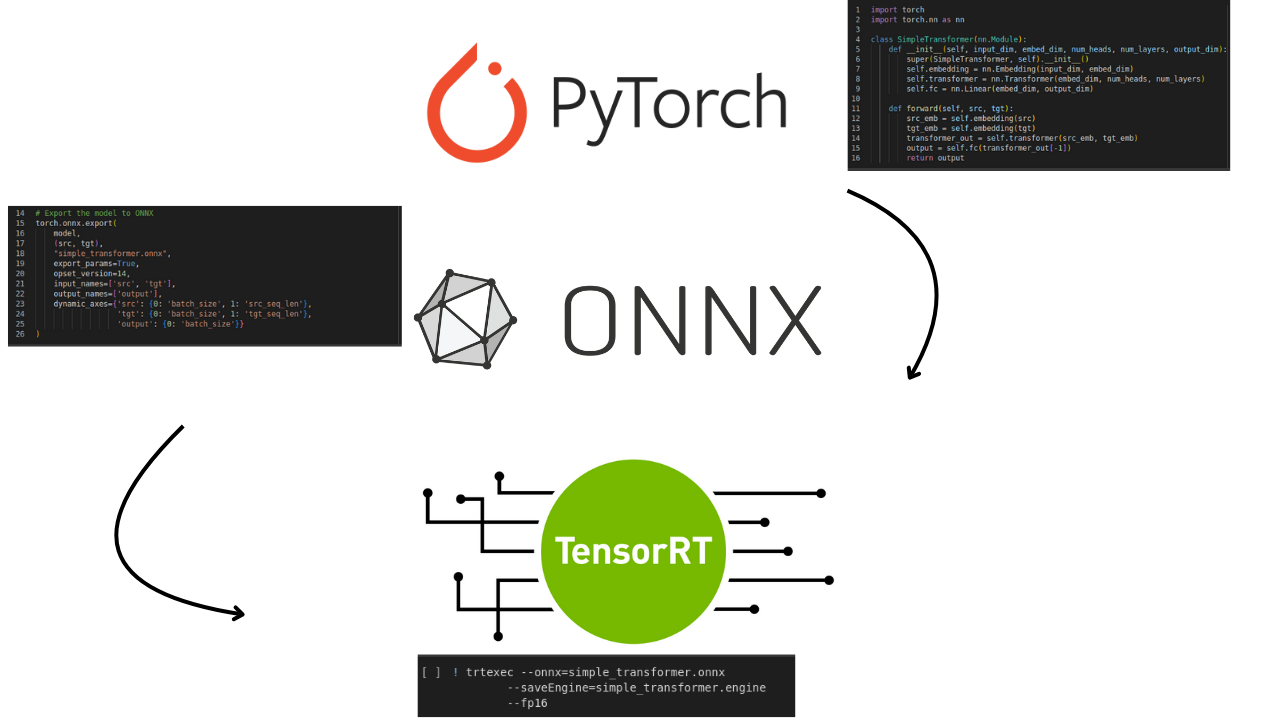

The field of deep learning is rapidly evolving, with models becoming increasingly complex and larger in scale. While frameworks like PyTorch are excellent for development and experimentation, deploying these models efficiently for inference requires targeted optimization. This is crucial for reducing latency, enhancing throughput, and improving resource efficiency, especially when scaling to production or deploying on devices with limited computing power.

TensorRT plays a key role in this process, offering powerful optimizations specifically designed for NVIDIA GPUs, enabling faster and more efficient inference. Although we explored the ONNX format to better understand different model conversion techniques, the real focus lies in TensorRT’s ability to bridge the gap between research and real-world deployment. In upcoming blogs, we’ll delve into more advanced tools like TensorRT-LLM and Torch-TensorRT, which further enhance performance for deep learning models on NVIDIA hardware.

ONNX

ONNX (Open Neural Network Exchange) is an open-source framework designed to promote interoperability between various deep learning tools and platforms by providing a standardized model format. Developed by Microsoft and Facebook, ONNX allows models trained in frameworks like PyTorch or TensorFlow to be easily transferred and used in other environments, such as Azure ML or NVIDIA TensorRT. It defines a common representation and set of operators for deep learning tasks, ensuring compatibility across different systems. With broad tool and hardware support, ONNX facilitates seamless model deployment and integration, enhancing flexibility and streamlining transitions between different machine learning ecosystems.

TensorRT

TensorRT is NVIDIA's high-performance deep learning inference optimizer and runtime library designed to accelerate the deployment of neural network models on NVIDIA GPUs. It optimizes trained models by applying techniques like layer fusion, precision calibration (e.g., FP16, INT8), and kernel auto-tuning to enhance inference speed and reduce memory usage. Supporting models from frameworks like TensorFlow, PyTorch, and ONNX, TensorRT converts them into an optimized format for efficient execution on NVIDIA GPUs. By leveraging hardware-specific features and reducing precision, TensorRT significantly improves inference performance while minimizing latency and computational resource requirements.

Code Time

Installation of Required Packages

First, install the necessary packages for TensorRT and the Python dependencies required for ONNX and PyCUDA, the apt-get command installs TensorRT, while pip installs specific versions of PyCUDA, ONNX, and ONNX Runtime to ensure compatibility and functionality for the subsequent steps.

! apt-get install tensorrt

! pip install pycuda==2024.1.2 onnx==1.16.2 onnxruntime==1.19.0

Definition of the Transformer Model in PyTorch

In this section, a simple Transformer architecture model is defined using PyTorch ,the SimpleTransformer class includes an embedding layer, a Transformer layer, and a fully connected layer.

import torch

import torch.nn as nn

import onnxruntime as ort

import numpy as np

import time

# Define the SimpleTransformer class

class SimpleTransformer(nn.Module):

def __init__(self, input_dim, embed_dim, num_heads, num_layers, output_dim):

super(SimpleTransformer, self).__init__()

self.embedding = nn.Embedding(input_dim, embed_dim)

self.transformer = nn.Transformer(embed_dim, num_heads, num_layers)

self.fc = nn.Linear(embed_dim, output_dim)

def forward(self, src, tgt):

src_emb = self.embedding(src)

tgt_emb = self.embedding(tgt)

transformer_out = self.transformer(src_emb, tgt_emb)

output = self.fc(transformer_out[-1]) # Get output from the last token

return output

# Model parameters

input_dim = 100 # vocabulary size

embed_dim = 512

num_heads = 8

num_layers = 6

output_dim = 10 # number of output classes

model = SimpleTransformer(input_dim,

embed_dim,

num_heads,

num_layers,

output_dim)

We sets up the model structure and specifies parameters such as embedding dimensions, number of heads, and layers, which are crucial for our inference phase.

Exporting the Model to ONNX Format

# Create dummy input tensors for PyTorch

src = torch.randint(0, input_dim, (5, 1)) # (src_seq_len, batch_size)

tgt = torch.randint(0, input_dim, (5, 1))

# Export PyTorch model to ONNX format

torch.onnx.export(

model,

(src, tgt),

"simple_transformer.onnx",

export_params=True,

opset_version=17,

input_names=['src', 'tgt'],

output_names=['output'],

dynamic_axes={'src': {0: 'src_seq_len', 1: 'batch_size'},

'tgt': {0: 'tgt_seq_len', 1: 'batch_size'},

'output': {0: 'batch_size'}}

)

This block converts our PyTorch model into the ONNX format. We create sample input tensors and then leverage the torch.onnx.export function to perform the conversion.

key parameters like

opset_versionand dynamic axis specification are included for compatibility and flexibility.

Converting ONNX Model to TensorRT Engine

! /usr/src/tensorrt/bin/trtexec --onnx=simple_transformer.onnx --saveEngine=simple_transformer.engine --fp16

This command utilizes the trtexec tool from TensorRT. It takes the ONNX model we just created and generates a highly optimized TensorRT engine, simple_transformer.engine, leveraging FP16 precision for faster inference.

TensorRT Inference Class

import tensorrt as trt

import pycuda.driver as cuda

import pycuda.autoinit

import numpy as np

class TensorRTInference:

def __init__(self, engine_path):

# Load the TensorRT engine

self.logger = trt.Logger(trt.Logger.INFO)

with open(engine_path, "rb") as f, trt.Runtime(self.logger) as runtime:

self.engine = runtime.deserialize_cuda_engine(f.read())

self.context = self.engine.create_execution_context()

self.stream = cuda.Stream()

def infer(self, src_input, tgt_input):

# Allocate buffers for input and output

input_shapes = [(1, 5)] * 2 # Adjust based on your input shapes (batch_size=1, seq_len=5)

output_shape = (1, 10) # Adjust based on your output shape

# Create host and device buffers

h_input_src = np.array(src_input, dtype=np.int32).reshape(input_shapes[0])

h_input_tgt = np.array(tgt_input, dtype=np.int32).reshape(input_shapes[1])

h_output = np.empty(output_shape, dtype=np.float32)

d_input_src = cuda.mem_alloc(h_input_src.nbytes)

d_input_tgt = cuda.mem_alloc(h_input_tgt.nbytes)

d_output = cuda.mem_alloc(h_output.nbytes)

# Copy inputs to device

cuda.memcpy_htod(d_input_src, h_input_src)

cuda.memcpy_htod(d_input_tgt, h_input_tgt)

# Execute inference

self.context.execute_v2([int(d_input_src), int(d_input_tgt), int(d_output)])

# Copy output back to host

cuda.memcpy_dtoh(h_output, d_output)

return h_output

This Python class encapsulates the process of performing inference using the TensorRT engine, it loads the engine, allocates memory on the GPU, copies input data, executes the inference, and retrieves the output.

Generating Input Data

We generate here random input tensors src and tgt to use as input for our model in different formats, these tensors simulate real-world data that would be fed into the Transformer model.

# Create dummy input tensors for PyTorch

src = torch.randint(0, input_dim, (5, 1)) # (src_seq_len, batch_size)

tgt = torch.randint(0, input_dim, (5, 1))

Measuring PyTorch Inference Time

# Measure PyTorch inference time

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model.eval()

model.to(device)

src, tgt = src.to(device), tgt.to(device)

start_time = time.time()

with torch.no_grad():

torch_output = model(src, tgt)

end_time = time.time()

torch_inference_time = end_time - start_time

print(f"PyTorch Inference time: {torch_inference_time:.6f} seconds")

This code measures the inference time of the original PyTorch model, it sends the model and input data to the appropriate device (GPU if available) and performs inference within a torch.no_grad context to avoid gradient calculations.

The output :

PyTorch Inference time: 0.009506 seconds

Measuring ONNX Runtime Inference Time

The inference time for the ONNX model is measured using ONNX Runtime.

# Load the ONNX model

onnx_model_path = "simple_transformer.onnx"

session = ort.InferenceSession(onnx_model_path)

# Prepare inputs for ONNX Runtime

src_input = src.cpu().numpy().astype(np.int64) # Convert to numpy and adjust dtype

tgt_input = tgt.cpu().numpy().astype(np.int64)

# Measure ONNX Runtime inference time

start_time = time.time()

onnx_output = session.run(['output'], {'src': src_input, 'tgt': tgt_input})

end_time = time.time()

onnx_inference_time = end_time - start_time

print(f"ONNX Inference time: {onnx_inference_time:.6f} seconds")

The code here loads the ONNX model, prepares the input data, and evaluates the model, this timing provides insights into the performance of the model in an ONNX Runtime environment.

The output :

ONNX Inference time: 0.024360 seconds

Measuring TensorRT Inference Time

Finally this block measures the inference time for the TensorRT engine by performing inference using the TensorRT optimized model.

# Initialize TensorRT inference

engine_path = "simple_transformer.engine"

trt_infer = TensorRTInference(engine_path)

src_input = src.cpu().numpy().astype(np.int64) # Convert to numpy and adjust dtype

tgt_input = tgt.cpu().numpy().astype(np.int64)

# Measure TensorRT inference time

start_time = time.time()

output = trt_infer.infer(src_input, tgt_input)

end_time = time.time()

# Calculate inference time

trt_inference_time = end_time - start_time

print(f"TensorRT Inference time: {trt_inference_time:.6f} seconds")

The output :

TensorRT Inference time: 0.002033 seconds

This highlights the performance benefits achieved through TensorRT's optimizations and provides a comparative benchmark against the PyTorch and ONNX Runtime timings.

Performance Analysis

In our performance analysis of our small Transformer architecture model, we observed the inference times for different formats and frameworks on a Tesla T4 GPU.

The recorded times were as follows:

- PyTorch Inference Time:

0.009506seconds - ONNX Inference Time:

0.024360seconds - TensorRT Inference Time:

0.002033seconds

These results reflect the performance of our model in its native PyTorch environment, after conversion to the ONNX format, and finally optimized using TensorRT.

PyTorch provided a baseline inference time of 0.009506 seconds, demonstrating the model's performance within its native framework.

ONNX, although valuable for model interoperability, exhibited a slower inference time of 0.024360 seconds. This result is expected since ONNX prioritizes compatibility rather than speed optimization.

While there is a more direct path using Torch-TensorRT, which offers in-framework compilation of PyTorch inference code for NVIDIA GPUs, we intentionally converted the model to ONNX as part of our learning process. This allowed us to explore and better understand model conversion and interoperability between different frameworks.

TensorRT demonstrated the most significant performance improvement, with an inference time of just 0.002033 seconds. This substantial reduction in inference time highlights TensorRT's effectiveness in optimizing model performance for NVIDIA GPUs. Its specialized optimizations, such as precision calibration and layer fusion, contribute to these impressive results.

Our study underscores the importance of framework and model optimization. While ONNX serves a crucial role in model conversion and interoperability, TensorRT provides substantial performance gains, particularly for deployment on NVIDIA hardware.

In conclusion, optimizing deep learning models for deployment is crucial to bridging the gap between research and practical application. While PyTorch is ideal for model development and experimentation, tools like TensorRT are essential for maximizing performance during inference, particularly on NVIDIA GPUs. Through our exploration, we've seen how converting models and leveraging TensorRT’s optimizations can drastically reduce latency and improve efficiency. As deep learning continues to evolve, mastering these techniques will be key to scaling AI solutions in real-world environments.

Happy optimizing !!